Shilad Sen, Jesse Vig, John Riedl, University of Minnesota

Summary

Tags are ubiquitous on the web, and this paper explores various algorithms for evaluating them. Specifically, it attempts to find the best metrics for tag selection, to compare how selection performs when based on implicit or explicit user behaviour, and to measure how each algorithm performs as tag density rises.

Details

For their research, the authors use the MovieLens movie recommender website, which allows people to tag and evaluate movies and to rate tags using thumbs up or down.

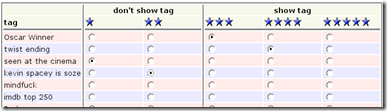

To collect a gold standard for tags, the authors asked their active user base to take a survey on tags and give feedback. Inspired by prior work, they considered three metrics: classification accuracy, precision for top-n, and precision for top-n%. Classification accuracy scores a selection algorithm on how it classifies all the tags, whereas the precision-based metrics test accuracy only for the top n or top n% of tags. The authors chose to proceed with top-n precision since it was easily interpretable for their tagging system.
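To make the three metrics concrete, here is a minimal Python sketch. It assumes each tag has a numeric score from some selection algorithm and a boolean gold-standard label. The function names, the threshold parameter, and the sample data are all hypothetical and are not taken from the paper.

```python
from math import ceil


def precision_at_n(scores, gold, n):
    """Fraction of the n highest-scored tags that the gold standard marks as good."""
    top = sorted(scores, key=scores.get, reverse=True)[:n]
    if not top:
        return 0.0
    return sum(1 for tag in top if gold[tag]) / len(top)


def precision_at_top_percent(scores, gold, percent):
    """Same as precision_at_n, with n taken as a percentage of all tags."""
    n = max(1, ceil(len(scores) * percent / 100))
    return precision_at_n(scores, gold, n)


def classification_accuracy(scores, gold, threshold):
    """Fraction of all tags whose thresholded score agrees with the gold label.

    The threshold is an assumption of this sketch, not a value from the paper.
    """
    correct = sum(1 for tag, s in scores.items() if (s >= threshold) == gold[tag])
    return correct / len(scores)


# Made-up scores and gold labels, for illustration only.
scores = {"pixar": 0.9, "funny": 0.7, "seen it": 0.6, "dvd": 0.2}
gold = {"pixar": True, "funny": True, "seen it": False, "dvd": False}

print(precision_at_n(scores, gold, 2))
print(precision_at_top_percent(scores, gold, 50))
print(classification_accuracy(scores, gold, 0.5))
```

Top-n precision only looks at the tags a system would actually display, which may be part of why it is easier to interpret than accuracy over every tag.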

The authors then evaluated a number of implicit and explicit tag selection algorithms. The interesting observations were:

- Explicit techniques perform remarkably better than implicit ones.

- The popular technique of ranking tags on the number of times they were applied performs fairly well.

- A combination of several implicit and/or explicit techniques outperforms any individual technique.

- apps_per_movie (the average number of times a tag is assigned to a given movie) performs best amongst the implicit techniques.

- hierarchical_rating (a composite algorithm) performs best amongst the explicit techniques.
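As a rough illustration of the implicit signals mentioned in the list above, the sketch below computes a popularity count and an apps_per_movie-style average from a log of tag applications. The `(user, movie, tag)` record layout, the `num_apps` name, and the exact formula (total applications divided by the number of distinct movies carrying the tag) are assumptions of this sketch. The paper's precise definitions may differ.

```python
from collections import defaultdict


def implicit_tag_scores(applications):
    """Compute two implicit signals per tag from (user, movie, tag) records.

    num_apps:        total number of times the tag was applied (popularity)
    apps_per_movie:  applications divided by the number of distinct movies
                     the tag appears on (assumed reading of the summary)
    """
    total_apps = defaultdict(int)
    movies = defaultdict(set)
    for _user, movie, tag in applications:
        total_apps[tag] += 1
        movies[tag].add(movie)
    return {
        tag: {
            "num_apps": total_apps[tag],
            "apps_per_movie": total_apps[tag] / len(movies[tag]),
        }
        for tag in total_apps
    }


# Made-up tag applications, for illustration only.
applications = [
    ("u1", "Toy Story", "pixar"),
    ("u2", "Toy Story", "pixar"),
    ("u3", "Up", "pixar"),
    ("u1", "Up", "seen it"),
]

for tag, signals in implicit_tag_scores(applications).items():
    print(tag, signals)
```

Either signal can then be used to rank tags and evaluated against a gold standard with a top-n precision metric.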

The above results were also validated in a user study. Finally, the authors observed that the performance gap between implicit and explicit techniques widens as tagging activity rises, although they did not check this for more than 200 tags.

Review

This was a very interesting paper to read. The authors have done a very detailed and scientific study of various tagging algorithms, and considered different metrics before finally choosing one. An open question, which the authors also mention in their future work, is whether the actual performance difference between the best and the popular tagging techniques is visible to a normal user.

Disclaimer

The work discussed above is an original work presented at IUI 2009 by the authors/affiliations indicated at the start of this post. This post itself was created as part of a course requirement for CPSC 436.
