Jaime Teevan, Susan T. Dumais, Daniel J. Liebling, and Richard L. Hughes, Microsoft Research

Summary

The Web is a dynamic system, and the content of a web page often changes between one visit and the next. The authors present DiffIE, a browser plug-in that highlights these changes when a user returns to a page.

Details

Studies indicate that 50% to 80% of web page visits are revisits. They also indicate that, over a five-week period, 66% of these revisited pages have, on average, 20% of their content changed. Often, the purpose of a revisit is to see exactly these changes. Existing ways of finding changes require explicit action from the user, for example visiting an Internet archive site or subscribing to an RSS feed. Site owners can also explicitly mark changed sections of their websites in various ways. The paper instead presents a user-centric approach that requires no action beyond a one-time install of the DiffIE browser plug-in.

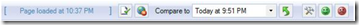

DiffIE is a plug-in for Internet Explorer made up of three components: a cache component that stores previous visits to web pages, a comparison component that identifies and highlights changes between the current and cached versions of a page, and a toolbar component that exposes these features through a small GUI. On every page visit, DiffIE hashes the text nodes of the page's DOM tree using MD5. Each page representation is stored in a cache file tied to the page's URL and a timestamp. On a subsequent revisit, DiffIE compares the cached representation with the current page and can identify content that was added, changed, or deleted. Added and changed content is highlighted by changing its background colour.

For performance and security reasons, DiffIE skips pages with very complex DOMs as well as secure (https://) pages, and it does not start comparing until the page has finished loading. The toolbar lets users toggle highlighting, exclude sites from caching, choose which cached version to compare against, configure other options, and send feedback.
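The hash-and-compare step can be sketched in Python. This is a minimal sketch under stated assumptions, not DiffIE's implementation. The plug-in works on Internet Explorer's live DOM, whereas this sketch parses raw HTML with the standard-library `html.parser`. It also matches text nodes across visits by a positional path such as `html[0]/body[0]/p[2]/#text[0]`, which is a simplifying assumption made here and not the paper's stated method. The names `TextNodeHasher`, `page_signature`, `diff_signatures`, and `VisitCache` are hypothetical, and `VisitCache` is an in-memory stand-in for the per-URL, per-timestamp cache files.

```python
from __future__ import annotations

import hashlib
from html.parser import HTMLParser

# Elements that never have an end tag, so they are not pushed on the path stack.
VOID_TAGS = {"area", "br", "hr", "img", "input", "link", "meta", "source", "wbr"}
SKIP_TEXT_IN = {"script", "style"}  # text here is not user-visible content


class TextNodeHasher(HTMLParser):
    """Collects a {DOM path: MD5 hex digest} entry for every non-blank text node."""

    def __init__(self) -> None:
        super().__init__()
        self.stack: list[str] = []                      # open elements, e.g. "p[1]"
        self.child_counts: list[dict[str, int]] = [{}]  # sibling counters per depth
        self.nodes: dict[str, str] = {}

    def _next_index(self, kind: str) -> int:
        # Index of this element/text node among same-kind siblings.
        counter = self.child_counts[-1]
        index = counter.get(kind, 0)
        counter[kind] = index + 1
        return index

    def handle_starttag(self, tag, attrs):
        index = self._next_index(tag)
        if tag in VOID_TAGS:
            return
        self.stack.append(f"{tag}[{index}]")
        self.child_counts.append({})

    def handle_endtag(self, tag):
        if tag in VOID_TAGS:
            return
        # Pop back to the nearest matching open tag (tolerates unclosed tags).
        for i in range(len(self.stack) - 1, -1, -1):
            if self.stack[i].startswith(tag + "["):
                del self.stack[i:]
                del self.child_counts[i + 1:]
                break

    def handle_data(self, data):
        text = data.strip()
        if not text:
            return
        if self.stack and self.stack[-1].split("[")[0] in SKIP_TEXT_IN:
            return
        path = "/".join(self.stack + [f"#text[{self._next_index('#text')}]"])
        self.nodes[path] = hashlib.md5(text.encode("utf-8")).hexdigest()


def page_signature(html: str) -> dict[str, str]:
    """Hash every text node of a page; this is what gets cached per visit."""
    parser = TextNodeHasher()
    parser.feed(html)
    parser.close()
    return parser.nodes


def diff_signatures(cached: dict[str, str], current: dict[str, str]) -> dict[str, list[str]]:
    """Classify text-node paths as added, changed, or deleted since the cached visit."""
    return {
        "added": [p for p in current if p not in cached],
        "changed": [p for p in current if p in cached and cached[p] != current[p]],
        "deleted": [p for p in cached if p not in current],
    }


class VisitCache:
    """Hypothetical in-memory stand-in for cache files keyed by URL and timestamp."""

    def __init__(self) -> None:
        self._visits: dict[str, dict[float, dict[str, str]]] = {}

    def record(self, url: str, timestamp: float, html: str) -> None:
        self._visits.setdefault(url, {})[timestamp] = page_signature(html)

    def compare(self, url: str, html: str, base: float | None = None):
        versions = self._visits.get(url)
        if not versions:
            return None  # cold start: no earlier visit to compare against
        # Default to the most recent cached visit; the caller may choose another.
        chosen = base if base is not None else max(versions)
        return diff_signatures(versions[chosen], page_signature(html))


if __name__ == "__main__":
    cache = VisitCache()
    cache.record("http://example.com/", 1.0, "<p>Weather: sunny</p><p>News A</p>")
    print(cache.compare("http://example.com/",
                        "<p>Weather: rain</p><p>News A</p><p>News B</p>"))
    # prints {'added': ['p[2]/#text[0]'], 'changed': ['p[0]/#text[0]'], 'deleted': []}
```

Matching by position is what lets this sketch distinguish "changed" from "added". The trade-off is that inserting a paragraph near the top of a page shifts every later path, so the following paragraphs show up as changes. A position-free lookup of hashes would avoid that, but it could no longer tell a changed node from a new one.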

DiffIE was designed iteratively: feedback from 300 Microsoft employees who used the first stable release for two days was incorporated into the final release. In addition, 11 people took part in a two-week study in which they used DiffIE on their primary work computers and then described their experience in a semi-structured interview. The study found that DiffIE could be used effectively as a web monitoring tool and for finding expected and unexpected changes or new content. Suggested improvements included exploring other ways to highlight changes, highlighting content that has moved, and solving the cold-start problem by pre-filling the DiffIE cache with page versions from Internet archives or the user's local history.

Review

Identifying changes on the Internet is a formidable problem for users of all kinds. The trick, however, is to identify the changes that are relevant to a particular user and to present them unobtrusively. Although it falls short of fully achieving this, DiffIE is a step in the right direction, with some interesting features as well as some limitations. Another tool, Check4Change, takes a slightly different approach to the same problem.

Disclaimer

The work discussed above is original work presented at UIST 2009 by the authors and affiliation listed at the start of this post. This post was written as part of a course requirement for CPSC 436.