Daisuke Sakamoto et al., The University of Tokyo

Summary

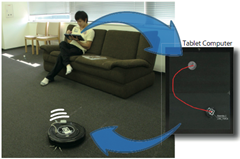

A sketch/stroke-based interface for controlling robots.

Details

Of late, humanoid robots have grown in the range of things they can do: walking, climbing, playing, and more. High cost and limited features remain a big deterrent for such robots, but the lack of an intuitive way to control them is also a problem. The authors built a high-level, sketch-based interface for controlling a popular vacuuming robot, the iRobot Roomba.

An array of ceiling cameras was used to provide a live top-down view of the room, which is a more natural and accurate frame for sketching commands than the robot's own viewpoint. ARToolKit was used to detect objects, and a set of standard stroke gestures was mapped to robot commands; for example, a cross means stop. In a pilot test, users drew these stroke gestures on a computer screen showing the live camera view. Participants were able to control the robots without any prior knowledge of robotics. Moreover, the interface supports asynchronous operation: a user can issue a command without having to wait for the robot to finish executing it.
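To make the gesture-to-command mapping and the asynchronism concrete, here is a minimal Python sketch. It is not the authors' implementation. The only mapping taken from the post is "cross means stop"; the other gesture labels (`open_stroke`, `closed_loop`), the `Command` names, the `RobotController` class, and the choice to let a stop discard pending commands are all hypothetical. Stroke recognition is assumed to have already happened, and "execution" is simulated by recording the command instead of sending it to a robot.

```python
import queue
import threading
from enum import Enum


class Command(Enum):
    STOP = "stop"                        # from the post: a cross means stop
    MOVE_ALONG_PATH = "move_along_path"  # hypothetical
    VACUUM_AREA = "vacuum_area"          # hypothetical


# Hypothetical gesture labels, assumed to come from an upstream stroke recognizer.
GESTURE_TO_COMMAND = {
    "cross": Command.STOP,
    "open_stroke": Command.MOVE_ALONG_PATH,
    "closed_loop": Command.VACUUM_AREA,
}


class RobotController:
    """Hypothetical controller: submit() returns at once, a worker thread executes."""

    def __init__(self):
        self._queue = queue.Queue()
        self.executed = []  # stand-in for commands actually sent to the robot
        self._worker = threading.Thread(target=self._run, daemon=True)
        self._worker.start()

    def submit(self, gesture, points):
        """Map a recognized gesture to a command and enqueue it without blocking.

        `points` is the stroke in top-down camera (screen) coordinates.
        Returns False for gestures with no mapping.
        """
        command = GESTURE_TO_COMMAND.get(gesture)
        if command is None:
            return False
        if command is Command.STOP:
            self._drain()  # assumption of this sketch: stop discards pending commands
        self._queue.put((command, points))
        return True

    def _drain(self):
        # Remove queued-but-not-started commands, keeping join() accounting correct.
        while True:
            try:
                self._queue.get_nowait()
            except queue.Empty:
                break
            self._queue.task_done()

    def _run(self):
        while True:
            command, points = self._queue.get()
            self.executed.append((command, points))  # real system: drive the robot here
            self._queue.task_done()

    def wait_idle(self):
        """Block until every submitted command has been executed or discarded."""
        self._queue.join()
```

For example, `c.submit("open_stroke", [(0, 0), (10, 5)])` returns immediately while the worker thread handles the command, which is the decoupling of command and execution the post describes. A queue plus one worker thread is simply the smallest structure that shows this. A real controller would also need path planning and feedback from the ceiling cameras.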

Review

The application is very nifty and has some clear advantages over speech-based interfaces for controlling robots. However, it is unlikely to replace speech entirely: some actions are more easily spoken than sketched, and speech can draw on pre-existing language constructs, while a sketch interface requires users to remember (or invent) their sketch commands. I expect the authors will run into these limitations of a sketch interface as soon as they move beyond vacuuming robots.

Disclaimer

The work discussed above is an original work presented at CHI 2009 by the authors/affiliations indicated at the start of this post. This post was created as part of a course requirement for CPSC 436.

Yeah, I thought the overhead camera control would be of limited use for other robots.

But for the Roomba itself, I think it's a great idea and a huge improvement in controls.

That said, I wish they had made the controls easier to use.